by Dr Kate Devitt, Chief Scientist, Trusted Autonomous Systems

“Military ethics should be considered as a core competency that needs to be updated and refreshed if it is to be maintained”

Inspector General ADF, 2020, p.508

The Centre for Defence Leadership & Ethics, Australian Defence College, has commissioned Trusted Autonomous Systems to produce videos and discussion prompts on the ethics of robotics, autonomous systems and artificial intelligence (RAS-AI).

These videos for Defence members are intended to build knowledge of theoretical frameworks relevant to potential uses of RAS-AI to improve ethical decision-making and autonomy across warfighting and rear-echelon contexts in Defence.

Major General Mick Ryan says that he can “foresee a day where instead of having one autonomous system for ten or a hundred people in the ADF, [we] will have a ratio that’s the opposite. We might have a hundred or a thousand for every person in the ADF”.

He asks, “how do we team robotics, autonomous systems and artificial intelligence (RAS-AI) with people in a way that makes us more likely to be successful in missions, from warfighting through to humanitarian assistance, disaster relief; and do it in a way that accords with the values of Australia and our institutional values?”

Wing Commander Michael Gan says, “robotics and autonomous systems have a great deal of utility: They can reduce casualties, reduce risk, they can be operated in areas that may be radioactive or unsafe for personnel. They can also use their capabilities to go through large amounts of data and be effective or respond very quickly to rapidly emerging threats”.

He goes on to say, “however, because a lot of this is using some sort of autonomous reasoning to make decisions, we have to make sure that we have a connection with the decisions that are being made, whether it is in the building phase, whether it is in the training phase, whether it is in the data, which underpins the artificial intelligence, robotic autonomous systems”.

Trusted Autonomous Systems CEO, Professor Jason Scholz, points out that “Defence has a set of behaviours about acting with purpose for defence and the nation; being adaptable, innovative, and agile; be collaborative and team-focused; and to be accountable and trustworthy to reflect, learn and improve; and to be inclusive and value others. All of these values and behaviours are included whether we are a ‘robotic and autonomous systems’ augmented force, or not”.

The Managing Director of Athena Artificial Intelligence, Mr Stephen Bornstein, says, “When it comes to RAS-AI in Defence and ethics associated with them … it’s very important to consider how a given company or a given AI supplier is establishing trust in that RAS-AI product”. He says that “ultimately, that assurance should be the most important thing before we start giving technologies to soldiers, seamen, or aircrew”.

Personnel engaging with the content should gain a clearer idea of how to reflect on ethical issues that affect human and RAS-AI decision-making in Defence contexts of use. This includes the limits and affordances of humans and technologies in enhancing ethical decision-making, as well as frameworks to help with RAS-AI development, evaluation, acquisition, deployment and review in Defence.

The videos draw on a framework from the Defence Science & Technology report ‘A Method for Ethical AI in Defence’ to help Defence operators, commanders, testers and designers ask five key questions about the technologies they’re working with:

- Responsibility – who is responsible for AI?

- Governance – how is AI controlled?

- Trust – how can AI be trusted?

- Law – how can AI be used lawfully?

- Traceability – how are the actions of AI recorded?

The videos consider four tools that may assist in identifying, managing and mitigating ethical risks in Defence AI systems.

The [‘Data Ethics Canvas’](https://theodi.org/article/data-ethics-canvas/) by the Open Data Institute encourages you to ask important questions about projects that use data and to reflect on the responses. These questions cover the security and privacy of the data collected and used, who could be negatively affected, and how to minimise negative impacts.

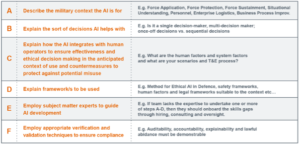

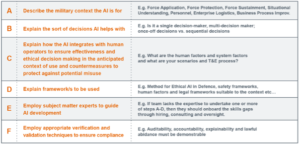

The AI Ethics Checklist ensures AI developers know the military context the AI is for, the sorts of decisions being made, how to create the right scenarios, and how to employ the appropriate subject-matter experts to evaluate, verify and validate the AI.

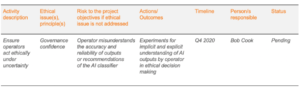

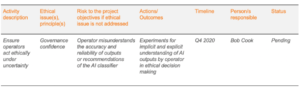

The Ethical AI Risk Matrix is a project risk management tool for identifying and describing ethical risks and their proposed treatments. The matrix makes individuals and groups responsible for reducing ethical risk through concrete actions on an agreed timeline and review schedule.
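For readers who maintain such a register in software, the idea can be sketched as a small data structure. This is an illustration only, written in Python: the field names, the example risks, the owners and the dates are all assumptions made for this sketch and are not taken from the Ethical AI Risk Matrix itself. It records who is responsible for each treatment and flags entries whose action is overdue or whose review has fallen due.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# The five facets named in the question list above.
FACETS = ("Responsibility", "Governance", "Trust", "Law", "Traceability")


@dataclass
class RiskEntry:
    """One row of a hypothetical ethical-risk register (all field names are assumptions)."""
    facet: str               # which of the five facets the risk falls under
    description: str         # what could go wrong
    treatment: str           # concrete action proposed to reduce the risk
    owner: str               # individual or group responsible for the action
    due: date                # agreed date for completing the action
    review_every_days: int   # agreed review interval, in days
    last_reviewed: date      # date of the most recent review
    done: bool = False       # whether the treatment has been completed

    def __post_init__(self):
        # Reject entries that are not tied to one of the five facets.
        if self.facet not in FACETS:
            raise ValueError(f"unknown facet: {self.facet!r}")

    def next_review(self) -> date:
        """Date on which the next scheduled review falls."""
        return self.last_reviewed + timedelta(days=self.review_every_days)


def needs_attention(register, today):
    """Return entries whose action is overdue or whose review is due."""
    return [
        r for r in register
        if (not r.done and r.due < today) or r.next_review() <= today
    ]


# Illustrative entries only; these risks, owners and dates are invented.
register = [
    RiskEntry(
        facet="Traceability",
        description="Decision logs are not retained after a mission",
        treatment="Add persistent logging of system decisions",
        owner="Engineering lead",
        due=date(2024, 3, 1),
        review_every_days=90,
        last_reviewed=date(2024, 1, 10),
    ),
    RiskEntry(
        facet="Trust",
        description="Operators have not seen the system's failure modes",
        treatment="Run scenario-based evaluation with subject-matter experts",
        owner="Test and evaluation team",
        due=date(2024, 6, 30),
        review_every_days=30,
        last_reviewed=date(2024, 3, 15),
    ),
]

flagged = needs_attention(register, today=date(2024, 4, 1))
for entry in flagged:
    print(f"{entry.facet}: {entry.treatment} (owner: {entry.owner})")
```

Keeping the owner, due date and review interval on each entry mirrors the matrix's emphasis on named responsibility and an agreed schedule; a real register would likely carry more detail, such as risk ratings and approval status.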