By Dr Jessica Korte, TAS Fellow

The Auslan Communication Technologies Pipeline (Auslan CTP) is a proposed AI-based language technology for Auslan (Australian Sign Language). I like to think of it as creating a prototype “Alexa for Auslan”. It is being developed using a participatory, human-centred design approach.

Why “Alexa for Auslan”?

Voice-based AI technologies are now commercially viable for "mainstream" languages, such as English, which have large amounts of spoken and written data available. Notable Deaf people, including Professor Christian Vogler of Gallaudet University, have identified a potential problem with this: if voice interfaces become more common for computers, because they are "faster" and "more natural", then Deaf people may be left behind and locked out of using modern technologies. Alternative interfaces (e.g. typing on keyboards and reading response text) will not provide the same experience, may be slower, and will require a point of physical interaction; as the COVID-19 pandemic showed, shared points of physical interaction can carry unexpected risks.

Deaf people who want to use “conversational interfaces” in their native language will need smart technologies which recognise and respond in sign languages. Within Australia, that means Auslan.

A recent study by PhD student Abraham Glasser at the Rochester Institute of Technology found that Deaf people in the USA would use sign language assistants for weather updates; alerts, notifications, and warnings; and video-based communication. They would also use an assistant to search for information; connect to smart devices; set alarms, timers, events, and reminders; and manage notes and lists. The Auslan CTP will investigate whether the Australian Deaf community has similar needs and develop a prototype to address them.

How will we make the Auslan Communication Technologies Pipeline?

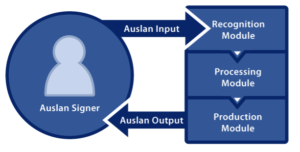

The Auslan CTP will be a three-module system, which will:

- Recognise Auslan signing;

- Process Auslan signing and generate an appropriate response; and

- Sign a response in Auslan.
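To make the flow between the three modules concrete, here is a minimal sketch of the pipeline as a chain of functions. It is not the project's design: the names `run_pipeline`, `Recognised`, `Response` and the `toy_*` stand-ins are hypothetical, and representing signing as a sequence of glosses (signs written as capitalised English labels) is an assumption made purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


# Hypothetical intermediate representations passed between modules.
@dataclass
class Recognised:
    glosses: List[str]  # recognised signs, e.g. ["WEATHER", "TOMORROW", "WHAT"]


@dataclass
class Response:
    glosses: List[str]  # signs the system should produce in reply


def run_pipeline(
    video_frames: list,
    recognise: Callable[[list], Recognised],
    process: Callable[[Recognised], Response],
    produce: Callable[[Response], str],
) -> str:
    """Chain the three modules: recognise -> process -> produce."""
    recognised = recognise(video_frames)
    response = process(recognised)
    return produce(response)


# Toy stand-ins for each module (illustrative only).
def toy_recognise(frames: list) -> Recognised:
    # A real module would analyse the video; this always "sees" one question.
    return Recognised(glosses=["WEATHER", "TOMORROW", "WHAT"])


def toy_process(r: Recognised) -> Response:
    # A real module would interpret meaning and query other systems.
    if "WEATHER" in r.glosses:
        return Response(glosses=["TOMORROW", "SUNNY"])
    return Response(glosses=["SORRY", "UNDERSTAND", "NOT"])


def toy_produce(resp: Response) -> str:
    # A real module would drive an avatar or generate video;
    # here the glosses are simply joined into a string.
    return " ".join(resp.glosses)


print(run_pipeline([], toy_recognise, toy_process, toy_produce))
# prints "TOMORROW SUNNY"
```

Keeping each module behind a plain function signature means any one of them can be swapped for a better model without touching the other two.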

Each module will be built on a suite of AI tools, building on the current state of the art. For the recognition module, this will include converting video of human signers into "skeletons", which both protects the privacy of signers using the technology and reduces the computational complexity.
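The article does not specify how skeletons are extracted or represented. As a minimal sketch, assuming an off-the-shelf pose estimator has already produced 2D keypoints for a frame (the joint names and the function `normalise_skeleton` are hypothetical), the code below centres the keypoints on the midpoint of the shoulders and measures them in shoulder widths, so the result no longer depends on where the signer stands or how far they are from the camera. It also illustrates both benefits mentioned above: no image pixels are retained, and a single 1280×720 colour frame's 2,764,800 values shrink to two numbers per keypoint.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]  # (x, y) in image coordinates


def normalise_skeleton(keypoints: Dict[str, Point]) -> Dict[str, Point]:
    """Translate and scale 2D keypoints so they are centred on the
    shoulder midpoint and measured in units of shoulder width."""
    lx, ly = keypoints["left_shoulder"]
    rx, ry = keypoints["right_shoulder"]
    # Reference point: midpoint between the two shoulders.
    cx, cy = (lx + rx) / 2, (ly + ry) / 2
    # Scale: distance between the shoulders.
    scale = math.hypot(lx - rx, ly - ry)
    if scale == 0:
        raise ValueError("shoulder keypoints coincide; cannot normalise")
    return {
        name: ((x - cx) / scale, (y - cy) / scale)
        for name, (x, y) in keypoints.items()
    }


frame = {
    "left_shoulder": (300.0, 200.0),
    "right_shoulder": (100.0, 200.0),
    "right_wrist": (100.0, 100.0),
}
print(normalise_skeleton(frame)["right_wrist"])  # prints (-0.5, -0.5)
```

In this example the wrist ends up half a shoulder width left of and above the shoulder midpoint (in image coordinates, where y grows downwards), regardless of the signer's position in the original frame.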

The recognition module will also be able to identify the linguistic elements of signs: the shape and orientation of the hands, the location and movement of signs, and the face and body movements that can alter a sign's meaning. Going beyond the direct exchange between the signer and the Auslan CTP, the module will also identify the meaning of individual signs by comparing these linguistic elements with the context of the sentence being signed, and will "understand" what has been signed based on the wider context of the conversation.
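One way to picture "identifying linguistic elements, then using context" is as a lookup followed by a tie-break. The sketch below is a deliberately simplified assumption, not the project's method: the lexicon entries `SIGN_A`, `SIGN_B`, `SIGN_C`, their parameter values, and the topic labels are invented placeholders rather than real Auslan descriptions. Matching is exact, and face and body (non-manual) features are omitted for brevity; a real recogniser would need probabilistic matching over all of these elements.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class SignParameters:
    """Manual parameters of a sign (frozen so instances compare by value)."""
    handshape: str
    orientation: str
    location: str
    movement: str


# Invented placeholder lexicon: SIGN_A and SIGN_B deliberately share the
# same manual parameters, so the hands alone cannot tell them apart.
LEXICON: Dict[str, SignParameters] = {
    "SIGN_A": SignParameters("flat", "palm_down", "chest", "circle"),
    "SIGN_B": SignParameters("flat", "palm_down", "chest", "circle"),
    "SIGN_C": SignParameters("fist", "palm_in", "chin", "tap"),
}

# Hypothetical topic associations used to break ties from context.
TOPICS: Dict[str, Set[str]] = {
    "SIGN_A": {"weather"},
    "SIGN_B": {"alarm"},
    "SIGN_C": {"weather", "alarm"},
}


def candidates(observed: SignParameters) -> List[str]:
    """Return every lexicon entry whose manual parameters match exactly."""
    return [gloss for gloss, params in LEXICON.items() if params == observed]


def disambiguate(observed: SignParameters, topic: str) -> str:
    """Prefer a candidate associated with the current conversation topic;
    fall back to the first match, or UNKNOWN if nothing matches."""
    matches = candidates(observed)
    if not matches:
        return "UNKNOWN"
    in_topic = [g for g in matches if topic in TOPICS.get(g, set())]
    return (in_topic or matches)[0]


obs = SignParameters("flat", "palm_down", "chest", "circle")
print(candidates(obs))              # prints ['SIGN_A', 'SIGN_B']
print(disambiguate(obs, "alarm"))   # prints SIGN_B
```

The same observed handshape, orientation, location and movement resolve to different signs depending on what the conversation is about, which is the role the article gives to sentence and conversation context.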

Finally, the production module of Auslan CTP will be able to generate meaningful responses to questions, interact with other machine systems, and generate an Auslan response through either animations or generated videos.

How does human-centred design apply to the Auslan CTP?

The goal of this project is to create a system that real human beings could use. The best way to do that, in my opinion, is to involve potential users in the design process. This is a core principle of approaches such as participatory design, which recognises that real users have abilities and insights that developers may not be aware of.

I want to work with Deaf people to centre the project around their needs, their abilities, and their insights into their language and the ways they use (and would like to use) technologies. This means that, from the start of data collection through to the production of a final prototype, we will be able to focus on the vocabulary Deaf people want to use, to prompt the behaviours they desire and expect from such technology.

Community participation is even more important in the design and development of AI-based language technologies. Deaf people involved in the project will be able to make informed decisions about three things: the language they are willing to share with researchers and with the general public, as they contribute to the datasets required to train computers to understand Auslan and sign back; how they want to give commands to a computer and receive feedback; and how the final system should treat identifiable and non-identifiable data.

Work so far

Participatory design: interviewing Australian Deaf people to find out how they would like to interact with this device.

In the project to date, progress has been made in the development of the recognition and production modules, as well as in the creation of testing environments.

What does this have to do with trusted autonomous systems in a Defence context?

Auslan is representative of the types of communication that could be required in human-robot teaming. Gesture-based communication between humans and machines needs both theoretical and practical foundations from visual-gestural languages, such as Auslan, which formalise communication through gesture and movement.

The same technologies which will enable the Auslan CTP should be able to support gesture-based interfaces for a wider range of machines, including robots used in operational contexts. If Alexa can control smart lights and air conditioners, a similar system should be able to issue commands to robots.
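The Alexa comparison suggests a simple mechanism: once signing has been recognised, it is mapped to an "intent" that a device or robot can execute. The sketch below assumes recognised output arrives as a gloss sequence plus a confidence score; the intent table, device names, actions, and the 0.9 threshold are all hypothetical. The confidence check reflects a design choice relevant to trusted autonomous systems: when recognition is uncertain, the system declines to act so it can ask the signer to repeat.

```python
from typing import Dict, List, Optional, Tuple

Command = Tuple[str, str]  # (target device, action)

# Hypothetical intent table: recognised gloss sequence -> command.
INTENTS: Dict[Tuple[str, ...], Command] = {
    ("LIGHT", "ON"): ("lights", "switch_on"),
    ("LIGHT", "OFF"): ("lights", "switch_off"),
    ("ROBOT", "STOP"): ("robot", "halt"),
    ("ROBOT", "COME", "HERE"): ("robot", "return_to_operator"),
}


def to_command(glosses: List[str], confidence: float,
               threshold: float = 0.9) -> Optional[Command]:
    """Map a recognised gloss sequence to a device command.

    Returns None when recognition confidence is below the threshold,
    so the caller can ask the signer to repeat instead of acting.
    Raises KeyError if the sequence has no registered command.
    """
    if confidence < threshold:
        return None
    key = tuple(g.upper() for g in glosses)
    if key not in INTENTS:
        raise KeyError(f"no command registered for {key}")
    return INTENTS[key]


print(to_command(["robot", "stop"], confidence=0.95))  # prints ('robot', 'halt')
print(to_command(["ROBOT", "STOP"], confidence=0.5))   # prints None
```

The same lookup serves smart lights and robots alike; only the entries in the table change.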

Possible military applications include:

- DST Kelpie project: this project's focus on interaction via lexicalised and non-lexicalised signing, as used by diverse individual visual-gestural communicators, provides a basis for machine recognition and understanding that could underlie DST's Kelpie project. The research could lead to gesture-based communication with machines through AI tools and techniques for understanding gesture, which are currently a gap for the sector.

So why Auslan?

Designing a system for Auslan has the advantage of being able to work with members of the Australian Deaf community. As fluent signers, they are experts in visual-gestural communication, and use a language with a long history of using 3D space to convey meaning. Because Auslan is a natural language, which has evolved over time in response to the expressive needs of its signers, it supports more fluid and natural interactions than artificially constructed sign systems or gesture sets, including military sign systems. This could lead to the development of more natural gestural interfaces for a wide array of technologies, from playing VR games to controlling surgical robots.

Hearing people have much to learn from Deaf people if we’re willing to turn off our ears and “listen” with our eyes.