Enabling COLREGs Compliance for Autonomous & Remotely Operated Vessels

By Robert Dickie1 and Rachel Horne2

1Group Leader, Systems Safety & Assurance, Frazer-Nash Consultancy Ltd

2Assurance of Autonomy Activity Lead, Trusted Autonomous Systems (TAS)

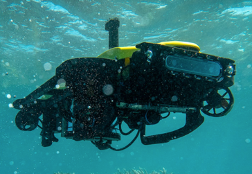

Autonomous vessels of various sizes, forms and speeds are already at sea, on the surface and beneath it. The International Regulations for Preventing Collisions at Sea (COLREGs), published by the International Maritime Organization (IMO) in 1960 and updated in 1972, govern the ‘rules of the road’ at sea. COLREGs describe the features that vessels must have so that they can be seen and identified, define the means of communication between vessels for signaling intent and, most importantly, describe the navigational behaviors expected of vessels in proximity to one another in order to avoid collision. It is clear from the terms and phrases used in COLREGs that the authors did not conceive of navigational or operational decisions being made by computers. As a result, compliance for autonomous vessels is difficult and not well understood.

Autonomous systems technology does not replicate humans; it emulates some of their skills using a different set of ‘senses’ and decision-making processes, and brings new capabilities to operations. This means that humans and autonomous technologies have different weaknesses, strengths, risks and mitigations.

The autonomous maritime industry has been wrestling with the challenge of ‘compliance’ with COLREGs for years, in terms of both understanding how the regulations apply and demonstrating compliance. The challenge for the designer or operator of an autonomous vessel is that the regulations are phrased on the underlying assumption that a human is operating the vessel. Where an autonomous control system performs some or all of the functions a human would previously have performed, it can be difficult to work out what constitutes ‘compliance’ in a practical sense, and in a way that the regulator, the Australian Maritime Safety Authority (AMSA), would accept. This difficulty can lead to additional costs, delays, and operations that are subject to more limitations than the actual risks warrant.

Developing a one-off COLREGs compliance case for a single autonomous vessel is onerous for the designer or operator. It also creates difficulty for AMSA, both in the resources required to assess each compliance case and in ensuring consistency in regulatory decision making. A repeatable compliance framework designed to reduce these burdens would deliver significant efficiencies for designers, operators, and regulators of autonomous vessels.

New TAS project to address COLREGs challenge

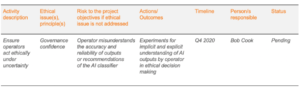

The TAS Assurance of Autonomy team have commenced a new project aimed at addressing the COLREGs challenge by developing an enabling framework which supports a practical and appropriate level of compliance for autonomous vessels. The project, known as the ‘TAS COLREGs project’, will consider the specific risks posed by the full spectrum of autonomous vessels, thus allowing flexibility in the operator’s approach to compliance. To maximise usefulness and future-proofing, the approach will be to offer a range of risk mitigation options which address the ‘spirit’ of COLREGs, rather than defining a set of performance requirements or specifications with which all autonomous vessels must comply.

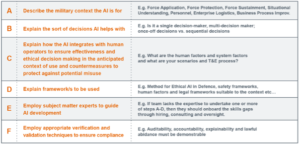

The guiding principles for the TAS COLREGs project (see Figure 1) are:

- The compliance methodology will be repeatable and scalable across a broad range of autonomous vessels.

- The underpinning philosophy of the compliance methodology will be logical, reasoned and justified by argument.

- The compliance methodology will be enabling rather than constraining whilst upholding the purpose and spirit of COLREGs.

- The process followed to use the compliance methodology will be simple to follow and supported by guidance.

- The project aims to develop a methodology which can be agreed to by the regulator.

Outputs of the TAS COLREGs project include an operational tool and user guidance.

Stakeholder engagement will occur over the coming weeks to ensure the tool is fit for purpose and considered acceptable and appropriate by AMSA. This tool will be trialed with a variety of autonomous vessels in 2021, and then refined as necessary, before being released.

The TAS COLREGs project is being delivered by Frazer-Nash Consultancy and led by Robert Dickie, with the support of Marceline Overduin and Andrejs Jaudzems. Robert has extensive experience in the assurance of maritime autonomous systems, having been a member of the UK Maritime Autonomous Systems Regulatory Working Group (MASRWG) and having developed the initial draft of the Lloyd’s Register Code for Un-crewed Marine Systems.

The output of the TAS COLREGs project will be available on the TAS website as a useful resource for designers and operators in the form of a repeatable COLREGs compliance framework, supported by a tool and user companion guide.

The COLREGs resource will also be included in the Body of Knowledge Toolbox being developed by the TAS Assurance of Autonomy team under the National Accreditation Support Facility Pathfinder Project (NASF-P). The Toolbox will assist designers, operators, regulators, and other stakeholders in the Australian autonomous systems ecosystem to navigate the assurance and accreditation process for autonomous systems efficiently and successfully.

If you would like to contact us in relation to the TAS COLREGs project, to offer feedback, suggestions, or request more information, please email us at NASFP@tasdcrc.com.au.