Authored by: Rain Liivoja, Eve Massingham, Tim McFarland and Simon McKenzie, University of Queensland

The incorporation of autonomous functions in weapon systems has generated a robust ethical and legal debate. In this short piece, we outline the international law framework that applies to the use of autonomous weapon systems (AWS) to deliver force in an armed conflict. We explain some of the reasons why using an AWS to deliver force is legally controversial and set out some of the ways in which international law constrains the use of autonomy in weapons. Importantly, we explain why users of AWS are legally required to be reasonably confident about how they will operate before deploying them. We draw on the work that the University of Queensland’s Law and the Future of War Research Group is doing to examine how the law of armed conflict (LOAC), and international law more generally, regulates the use of autonomous systems by militaries.

AWS are not prohibited as such

According to a widely used United States Department of Defense definition, an AWS is ‘a system that, once activated, can select and engage targets without further intervention by a human operator’. International law does not specifically prohibit such AWS. Some AWS might be captured by the ban on anti-personnel land mines or the limitation on the use of booby-traps, but there exists no comprehensive international law rule outlawing AWS as a class of weapons.

There are those who argue, for a variety of reasons, that an outright ban ought to be placed on AWS. We take no position in relation to that argument. We note, instead, that unless and until such time as a ban is put in place, the legality of AWS under international law depends on their compatibility with general LOAC rules, especially those that deal with weaponry and targeting.

AWS are not necessarily inherently unlawful under LOAC

Independently of any weapon-specific prohibitions (such as the ban on anti-personnel land mines), LOAC prohibits the use of three types of weapons:

• weapons of a nature to cause superfluous injury or unnecessary suffering (Additional Protocol I to the Geneva Conventions (API) article 35(2); Customary International Humanitarian Law (CIHL) Study rule 70);

• weapons of a nature to strike military objectives and civilians or civilian objects without distinction (API article 51(4); CIHL Study rule 71);

• weapons intended or expected to cause widespread, long-term and severe damage to the natural environment (API article 35(3); see, eg, CIHL Study rules 45 and 76).

Weapons falling into any of these categories are often described as being ‘inherently unlawful’. LOAC requires States to make an assessment about whether a new weapon would be inherently unlawful (API article 36). The relevant test scenario is the normal intended use of the weapon. This means that being able to envisage a far-fetched scenario in which superfluous injury or indiscriminate effects could be avoided does not make the weapon lawful. Conversely, the possibility of a weapon causing superfluous injury or indiscriminate effects under some exceptional and unintended circumstances does not make the weapon inherently unlawful.

A specific AWS, just like any other weapon, can fall foul of one of these three general prohibitions. Such an AWS would then be inherently unlawful, and its use would be prohibited. But it is impossible to say that all AWS would necessarily be prohibited by one of these three principles. In other words, we cannot conclude that all AWS are inherently unlawful.

We note, at this juncture, the Martens Clause, contained in many LOAC instruments, whereby in the absence of specific treaty rules ‘civilians and combatants remain under the protection and authority of the principles of international law derived from established custom, from the principles of humanity and from the dictates of public conscience.’ There are considerable disagreements about the interpretation of this clause generally, as well as its significance to weapons. Considering that the general rules concerning weapons (outlined above) have now been codified in the Additional Protocols, it is our view that the Martens Clause has limited relevance to weapons post 1977.

AWS must be used in compliance with LOAC

LOAC imposes obligations to select and deploy weapons so as to limit the effects of armed conflict. These legal obligations are held by individuals and States. This has been expressly acknowledged by the Group of Governmental Experts on Lethal Autonomous Weapons Systems, who have noted that LOAC obligations are not held by AWS. It is up to the individual(s) using an AWS, and the State that has equipped them with the system, to make sure that they are doing so consistently with their legal obligations.

There are three key LOAC principles with which the user of an AWS must comply:

• The principle of distinction requires belligerents to direct their attacks only against lawful military objectives (combatants, members of organised armed groups, other persons taking a direct part in hostilities, and military objects) and not to direct attacks against civilians or civilian objects (API articles 48, 51 and 52; CIHL Study rule 1).

• The principle of proportionality requires that attacks not cause harm to the civilian population (‘collateral damage’) that is foreseeably excessive in relation to the military advantage anticipated from the attack (API article 57(2)(b); CIHL Study rule 14).

• The principle of precautions in attack requires those who plan or decide upon attacks to take feasible precautions to verify the lawfulness of targets, to minimise collateral damage, and, where possible, to give advance warnings to affected civilians (API article 57(2); CIHL Study rule 15).

These are obligations that relate to attacks. Attacks comprise acts of violence deployed in both offensive and defensive capacities (API article 49(1)), which may or may not have lethal effect. Therefore, these three rules are applicable to all attacks – regardless of whether they are offensive or defensive and regardless of whether they are ultimately lethal.

There is also an overarching general duty to exercise ‘constant care’ to spare the civilian population, civilians, and civilian objects in all military operations (API article 57(1); CIHL Study rule 15).

These principles seek to balance the often competing interests of military necessity and humanity. They are not easy to apply in practice. In particular, in relation to precautions in attack, there are legitimate questions about how precautions ought to be taken when a weapons system has autonomous capabilities. What is clear, however, is that the obligation to take precautions is an obligation of the user of the AWS, not an obligation of the AWS itself.

The lawfulness of AWS use depends on the expected consequences

Compliance with the three LOAC principles mentioned above is straightforward when simple weapons are used in the context of close combat. The principles of distinction and proportionality do not pose much difficulty when a combatant stabs an enemy with a bayonet or shoots them at close range.

Warfare has, however, evolved so that many attacks are carried out by means of increasingly sophisticated technology. Thus, in many instances, compliance with distinction and proportionality entails using technology in the expectation that it will lead to outcomes normally associated with the intended use.

Under current law, combatants can rely on complex weapon systems, including AWS, if they can do so consistently with their LOAC obligations. This means a lot rides on just how confident the combatant is that the weapon will behave as expected in the circumstances of use. The potential complexity of AWS raises some thorny technical, ethical and legal questions.

LOAC has never been prescriptive about the level of confidence required, but it is clear that an individual need not be absolutely sure that using a particular weapon will result in the desired outcome. A ‘reasonable military commander’ standard, articulated in the context of assessing proportionality of collateral damage, would arguably be applicable here. Consider a military commander who deploys a tried and tested artillery round against a verified military objective that is clearly separated from the civilian population. The commander will not be held responsible for an inadvertent misfire that kills civilians. It is also clear that there will be ‘room for argument in close cases’ (Final Report to the Prosecutor by the Committee Established to Review the NATO Bombing Campaign Against the Federal Republic of Yugoslavia, para 50). Indeed, a number of States have clarified that military decisions must only be held to a standard based on the information ‘reasonably available to the [decision-maker] at the relevant time’ (eg, API, Reservation of the United Kingdom of Great Britain and Northern Ireland, 2 July 2002, (c)).

The situation is made more difficult by the challenge of predicting how an autonomous system will behave. Some AWS may be such that a user will never be able to be confident enough about the outcome that its use would achieve in the circumstances of the proposed attack. The use of such a weapon would therefore never be lawful. However, it is difficult to ascertain this without looking at a specific system. It seems clear to us that the lawful use of AWS under LOAC will require a comprehensive approach to testing and evaluating the operation of the system. In addition, the individuals using the weapons will have to be equipped with enough knowledge to understand when an autonomous system will perform consistently with the LOAC obligations of distinction, proportionality and precautions in attack. Put simply, the operator(s) must understand what will happen when they use the system.

This level of understanding is clearly possible. A number of weapons systems with a high level of autonomy are already in use (eg, the Aegis Combat System used on warships) in environments where there is evidence-based confidence about how they operate. This confidence allows the individual responsible for their deployment to comply with their legal obligations.

Some autonomous systems will be able to be used in a variety of contexts. Where this is the case, the lawfulness of a decision to deploy the system in any one case will depend on an assessment of both the system’s capabilities and the environmental conditions.

The following example illustrates this point.

Scenario

A commander wishes to deploy a land-based AWS that is designed to identify and target vehicles that are armed. The system has been tested and approved by the State pursuant to its Article 36 legal review obligations. The system cannot clearly identify persons. The commander understands the system and its capabilities. The environment will dictate how it can be used – with what precautions, according to what parameters, on what setting.

Environment – Designated geographic zone that is secured and patrolled such that civilians are not within the area. Possible implications for lawful use of the AWS – legally unproblematic and operationally valuable.

Environment – Area with heavy military presence. Intelligence suggests farmers in the area routinely carry small arms and light weapons for use against predatory animals. Possible implications for lawful use of the AWS – could be facilitated by specific settings and parameters in order to take into account the environment and context in which it is operating.

Environment – Dense urban setting with high rates of civil unrest and crime. Possible implications for lawful use of the AWS – would entail a significant degree of risk to civilians and would be legally problematic.

The environment and circumstances are key

There is no hard and fast rule that an AWS can be lawfully used in a particular context. The user must evaluate the system to ensure that, in the circumstances in which they seek to deploy it, they will be complying with their LOAC obligations.

This evaluation will come from understanding the weapon and how it operates, understanding the environment in which they wish to deploy it, and understanding the task for which they wish to deploy it. It may be possible to lawfully balance the military advantage that comes from high levels of automation with the risks of unintended consequences in one environment, and yet not in another environment. For example, sea mines, by virtue of the environment of their use, are able to have a high level of automation and still comply with the LOAC principles outlined above. However, if that same level of automation were to be used in a similar weapon on land, this may result in a greater likelihood of a violation of the LOAC principles.

At its heart, delegation of the use of force to an autonomous system requires that those who decide to deploy it understand the consequences of its operation in the environment of use. This means that there will be no clear-cut answers, and the legality of a decision to deploy an AWS will depend on the context.

________________

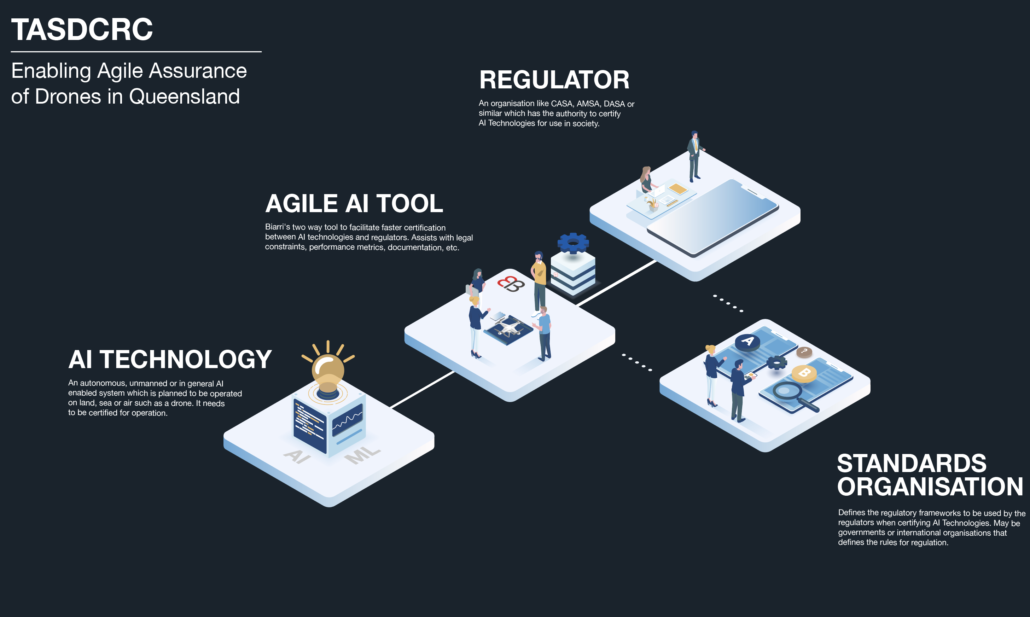

This blog post is part of the ongoing research of the Law and the Future of War Research Group at the University of Queensland. The Group investigates the diverse ways in which law constrains or enables autonomous functions of military platforms, systems and weapons. The Group receives funding from the Australian Government through the Defence Cooperative Research Centre for Trusted Autonomous Systems and is part of the Ethics and Law of Trusted Autonomous Systems Activity.

The views and opinions expressed herein are those of the authors, and do not necessarily reflect the views of the Australian Government or any other institution.

For further information contact Associate Professor Rain Liivoja, Dr Eve Massingham, Dr Tim McFarland or Dr Simon McKenzie.