New TAS project to develop a Detect and Avoid (DAA) Design, Test and Evaluation (DT&E) guideline for low-risk, uncontrolled airspace outside the airport environment

By Tom Putland – Director of Autonomy Accreditation – Air

The development of best practice policy, appropriate standards, and a strong assurance and accreditation culture has the potential to enhance innovation and support market growth for drones with autonomous capabilities in the maritime, air and land domains.

The Trusted Autonomous Systems (TAS) National Accreditation Support Facility Pathfinder Project (NASF-P), under the Assurance of Autonomy Activity, represents an opportunity to unlock Queensland’s, and by extension Australia’s, capacity to translate autonomous systems innovation into deployments, building on Queensland’s existing test facilities, an identified industry need, and strong government backing.

The overarching purpose of the NASF-P is to:

- Make it easier to design, build, test, assure, accredit, and operate an autonomous system in Australia, without compromising safety;

- Support and promote Queensland’s existing test ranges;

- Encourage domestic and international businesses to operate in Queensland and to use it as a base for testing, assuring, and accrediting autonomous systems; and

- Investigate, design, and facilitate the creation of an appropriate, independent third-party entity that can continue to support the design, build, test, assurance, accreditation, and operation of autonomous systems in Australia by bridging the gap between industry, operators, and regulators.

New project: Development of a DAA DT&E guideline

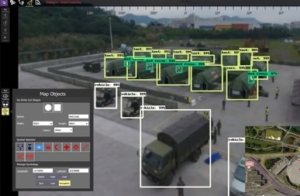

In the air domain specifically, the largest impediment to integrating unmanned aircraft (regardless of autonomy) into the National Airspace System (NAS) is complying with the intent[1] of the See and Avoid (SAA) requirements in Regulations 163 and 163A of the Civil Aviation Regulations 1988 (Cth), particularly for Beyond Visual Line of Sight (BVLOS) operations. In the absence of a technical solution, operators and the regulator must go through a labour-intensive stakeholder engagement process with all airspace users to prevent mid-air collisions. This approach will not scale to the projected volume of future Unmanned Aircraft Systems (UAS) operations.

An autonomous or highly automated DAA system that meets the safety objectives of CAR 163 and CAR 163A is a key enabling technology for integrating UAS into complex Australian airspace, and will form an integral part of the safety assurance framework for future UAS operations.

The TAS team have initiated a new project, led by Revolution Aerospace, to develop a Detect and Avoid (DAA) Design, Test and Evaluation (DT&E) guideline for low-risk, uncontrolled airspace outside the airport environment. This airspace is particularly relevant to Australian unmanned aircraft operations.

Dr Terry Martin (CEO) and the Revolution Aerospace team bring world-leading experience in Detect and Avoid, Machine Learning, Safety Assurance, Verification & Validation (V&V), and Test & Evaluation (T&E), and have represented Australia at international forums such as NATO, JARUS, and RTCA.

Terry is supported in this project by TAS’s Tom Putland, Director of Autonomy Assurance in the Air Domain. Tom has extensive experience in the regulation and safety assurance of UAS, having previously worked for CASA, including as CASA’s representative at JARUS and other international working groups. This team has the expertise, drive, and ability to solve this critical airspace problem for Australia.

The DT&E guideline will establish a process acceptable to CASA[2] that allows:

- the derivation of high-level safety objectives;

- the development of Verification and Validation (V&V) requirements;

- the conduct of relevant simulations and tests to demonstrate compliance with the safety objectives; and

- the collation of the compliance process and data into a package that supports regulators (e.g. CASA) in issuing an approval for the operation.

TAS will engage closely with key stakeholders, including CASA, the Australian Association for Unmanned Systems (AAUS), and other industry members, to ensure the process reflects current best practice and is appropriate and useful for the Australian aviation industry. The intent is for the new process to be available for testing by the end of the year.

On completion of this project, a follow-on project will work with industry partners and the Queensland Flight Test Range, Australia’s first and only commercial flight test range, to apply the guideline and demonstrate compliance with it. Together, these projects will increase the access and flexibility available for unmanned aircraft operations in Australian airspace.

If you are interested in learning more about these projects, being involved as an industry test partner, or discussing specifics (e.g. EO/IR sensing, classifiers/learning assurance, alerting and avoidance logic, formal verification, T&E), please email tom.putland@tasdcrc.com.au.

Other NASF-P projects underway

The NASF-P team have a number of projects underway, including:

- Preparation of a Body of Knowledge on the assurance and accreditation of autonomous systems;

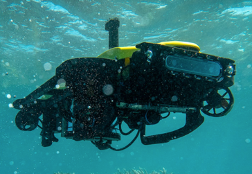

- Maritime domain: development of a repeatable, regulator-accepted methodology to demonstrate compliance with COLREGS for autonomous and remotely operated vessels; and

- Preparation of a business case for a new, independent, National Accreditation Support Facility, based in Queensland, that will better connect operators and regulators to facilitate more efficient assurance and accreditation.

If you would like to find out more about our work, or provide feedback on where you see the key risks and opportunities for the autonomous systems industry in Australia, please contact us at NASFP@tasdcrc.com.au.

[1] Through an approval under Subregulation 101.073(2) and Regulation 101.029 of the Civil Aviation Safety Regulations 1998 (Cth).

[2] Regular engagement with CASA will help ensure the final process is acceptable to CASA; note, however, that it has not yet been approved. The process is intended to eventually form part of an acceptable means of compliance for a BVLOS approval.