By Tara Roberson, TAS Trust Activities Coordinator & Kate Devitt, TAS Chief Scientist

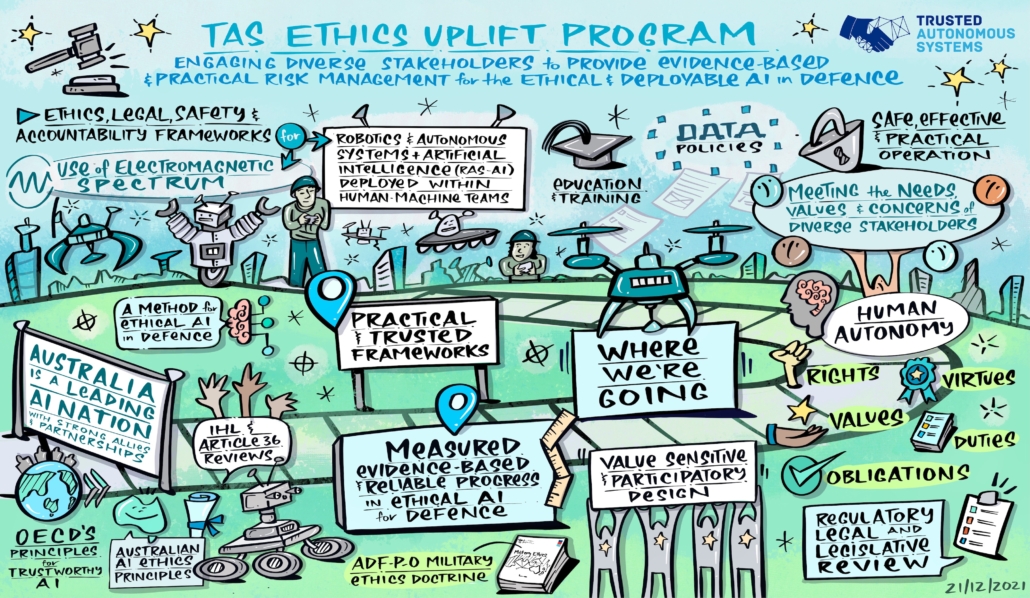

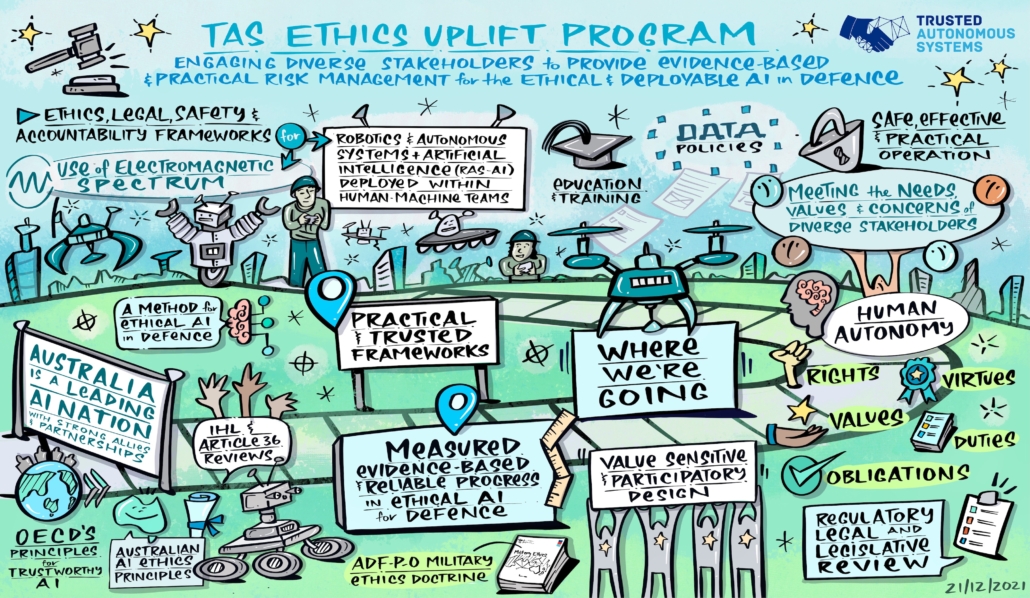

The Trusted Autonomous Systems (TAS) Ethics Uplift Program (EUP) supports theoretical and practical ethical AI research and provides advisory services for industry to enhance capacity for building ethical and deployable trusted autonomous systems for Australia’s Defence.

In 2021, the EUP celebrated the release of the technical report A Method for Ethical AI in Defence by the Australian Department of Defence, co-authored by TAS, RAAF Plan Jericho, and Defence Science & Technology Group (DSTG).

The EUP conducted Article 36 review and international humanitarian law workshops and produced a series of videos on the ethics of robotics, autonomous systems, and artificial intelligence for the Centre for Defence Leadership and Ethics (CDLE) at the Australian Defence College.

We appointed two new Ethics Uplift Research Fellows – Dr Christine Boshuijzen-van Burken and Dr Zena Assaad – and produced ‘A Responsible AI Policy Framework for Defence: Primer’.

2022 promises to be an even busier year with several projects underway, including a new suite of ten videos for CDLE.

Workshop in November 2021

In November 2021, Trusted Autonomous Systems (TAS) co-hosted their annual workshops on the ethics and law of autonomous systems with the UQ Law and the Future of War Research Group.

The ethics component of these workshops brought together ethics researchers, consultants, Defence, Government, and industry to discuss current best-practice approaches to ethics for robotics, autonomous systems, and artificial intelligence (RAS-AI) in Defence. It also showcased recent work from the TAS Ethics Uplift Program.

The workshop aimed to increase awareness of Australian artificial intelligence governance frameworks in civilian and military applications; share practical tips and insights on how to ensure ethical AI in Defence projects; and connect our ethics, legal, and safety experts.

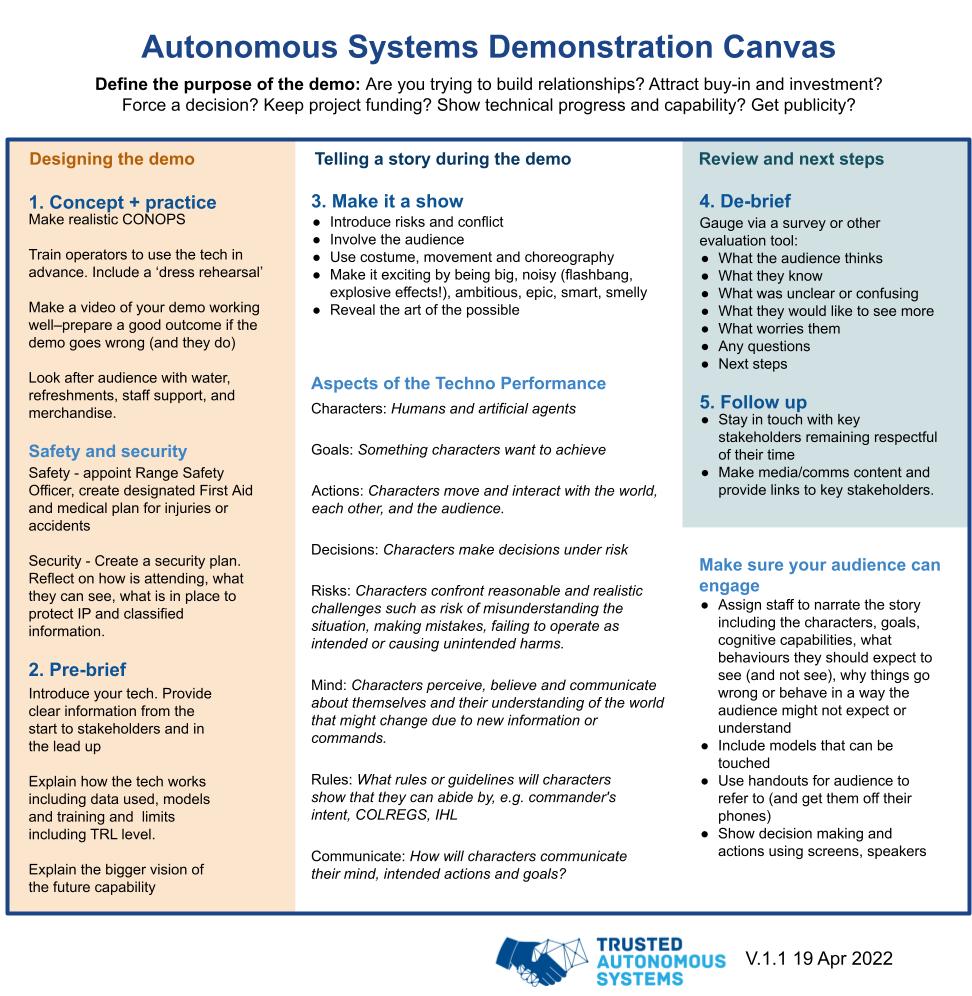

We have summarised the key points from the workshop presentations. These summaries have also been visualised by sketch artist Rachel Dight in the image above.

Australia’s approach to AI governance in security and Defence

TAS Chief Scientist Dr Kate Devitt opened the workshop with a presentation on the Australian approach to AI governance in Defence, based on a forthcoming chapter in AI Governance for National Security and Defence: Assessing Military AI Strategic Perspectives (Routledge).

Australia is a leading AI nation with strong allies and partnerships. Australia has prioritised the development of robotics, AI, and autonomous systems to develop sovereign capability for the military. The presentation summarised the various means used by Australia to govern AI in security and Defence. These include:

Ethical AI in Defence Case Study: Allied Impact

Christopher Shanahan (DSTG) presented on research led by Dianna Gaetjens (DSTG) conducting a case study of ethical considerations of the Allied IMpact Command and Control (C2) system using the framework developed in A Method for Ethical AI in Defence.

The research found that the way AI is developed, used, and governed will be critically important to its acceptance, use, and trust.

The presentation recommended that Defence develop an accountability framework to improve understanding of how accountability for decisions made with AI will be reviewed.

There also needs to be significant education and training for personnel who will use AI technology, along with a comprehensive set of policies to guide the collection, transformation, storage, and use of data within Defence.

How to Prepare a Legal and Ethical Assurance Project Plan for Emerging Technologies Anticipating an Article 36 Weapons Review

Damian Copeland (International Weapons Review) presented on preparing a Legal and Ethical Assurance Project Plan (LEAPP) for AI projects in anticipation of Article 36 reviews.

A LEAPP is an evidence-based legal and ethical risk identification and mitigation process, recommended in A Method for Ethical AI in Defence, for AI projects above a certain threshold (i.e. AI projects that are subject to an Article 36 weapons review).

The LEAPP sets out a contractor’s plan for ensuring the AI meets Commonwealth requirements for legal and ethical assurance.

LEAPP review should occur at all stages of a product’s life cycle, including during strategy and conception, risk mitigation and requirement setting, acquisition, and in-service use and disposal.

A LEAPP could directly contribute to Defence’s understanding of legal and ethical risks associated with particular AI capabilities.

Ethical Design of Trusted Autonomous Systems in Defence

Dr Christine Boshuijzen-van Burken – a new TAS Ethics Uplift Research Fellow – presented on her new project on ethical design of trusted autonomous systems in Defence.

An important part of the project will be devoted to answering the following question: What values does the Australian public prioritise when it comes to the design of AI for Defence? To answer it, close attention should be paid to the needs, values, and concerns of diverse stakeholders. These stakeholders include developers, humanitarian organisations, Defence, and the Australian public.

This research aims to build an ethical framework based on value sensitive design which will help developers of autonomous systems in Defence to think about the ethical aspects of their technologies.

This project will also produce case studies on the use of the ethical framework.

Human-Machine Team (HUM-T) Safety Framework for cross-domain networked autonomous systems in littoral environments

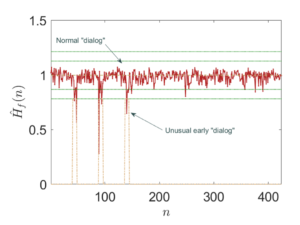

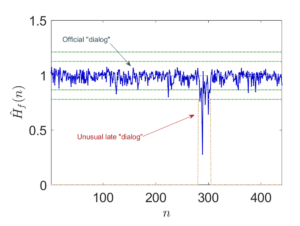

Dr Zena Assaad – a new TAS Ethics Uplift Research Fellow – presented on her new project on the complex problem of human-machine teaming with robotics, autonomous systems, and AI.

Human-machine teaming broadly encompasses the use of autonomous or robotic systems with military teams to achieve outcomes that neither could deliver independently of the other.

This concept describes shared intent and shared pursuit of an action and outcome.

This research aims to develop a safety framework for human-machine teaming operations to enable safe, effective, and practical operation for Defence. Safety, here, includes physical safety and psychosocial safety.

Ethical and Legal Questions for the Future Regulation of Spectrum

Chris Hanna – a lawyer in the ACT, former Legal Officer for Defence Legal, and TAS Ethics Uplift consultant – presented on the ethical and legal questions facing the future regulation of spectrum in Australia.

Autonomous systems depend on electromagnetic spectrum access to ensure efficient, effective, ethical, and lawful communication between humans and machines and between teams. Systems that manage spectrum provide command support and sustained communications, cyber, and electromagnetic activities. Because spectrum is a fixed resource, increasing demand requires efficient, effective, ethical, and lawful management that is considerate of privacy and other rights to communicate.

As part of his work with the TAS Ethics Uplift Program, Chris will contribute to a TAS submission in 2022 responding to the Department of Home Affairs discussion paper Reform of Australia’s electronic surveillance framework.

The Ethics of the Electromagnetic Spectrum in Military Contexts

Kathryn Brimblecombe-Fox – artist, PhD candidate at Curtin University, and Honorary Research Fellow at UQ – presented on her recent work on the military use of the electromagnetic spectrum (EMS).

Militaries around the world are increasingly interested in the EMS as an enabler of technology, a type of fires, a ‘manoeuvre’ space, and a domain. Kathryn suggests that the contemporary theatre of war is no longer confined to the geographies of land, air, and sea. It is also staged in space, cyberspace, and the electromagnetic spectrum, all domains that are permeable to each other to allow interoperability and joint force operations.